Benchmarking KVM/ZFS on Ubuntu 18.04 (raidz2)

Oct 29, 2018

Host

- Xeon E3-1230 V3 @ 3.3GHz (turbo off)

- 32 GB DDR3 1600 MHz

- Supermicro X10SLM-F

- 4 x 1TB Seagate Constellation ES.3 (raidz2)

- 128 GB Samsung USB 3.1 flash drive (host)

- vm.swappiness=0 / vm.vfs_cache_pressure=50

- ksm, hugepages, zswap

- CFQ scheduler

- Ubuntu 18.04.1 x64
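
The two `vm.*` values in the list above are kernel sysctls. As a minimal sketch (not necessarily how they were set for these tests), the current values can be compared against the benchmark values by reading `/proc/sys`. The helper name `show_tunable` is made up for this example.

```shell
#!/bin/sh
# Print the current value of a sysctl next to the value used for the
# benchmarks. show_tunable is a hypothetical helper, not a system tool.
show_tunable() {
    name=$1
    wanted=$2
    # vm.swappiness lives at /proc/sys/vm/swappiness, and so on.
    path="/proc/sys/$(printf '%s' "$name" | tr . /)"
    if [ -r "$path" ]; then
        current=$(cat "$path")
    else
        current="unavailable"
    fi
    printf '%s current=%s benchmark=%s\n' "$name" "$current" "$wanted"
}

show_tunable vm.swappiness 0
show_tunable vm.vfs_cache_pressure 50

# Applying them needs root, for example:
#   sysctl -w vm.swappiness=0
#   sysctl -w vm.vfs_cache_pressure=50
```

Values set with `sysctl -w` do not survive a reboot unless they are also placed in a sysctl configuration file.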

I redid some of the tests with an mdadm RAID 10 array of 240 GB SSDs and the results were nearly identical. It’s possible the virtio drivers or the Windows version I used were causing the strange storage performance.

I re-ran none / threads with a Debian 9 host and a Server 2008 R2 guest, and the sequential speeds are great: 1455 MB/s reads and 548 MB/s writes. Everything else looks good too. Very strange.

I don’t intend to use ZFS for VMs at the moment due to lack of encryption support and some annoyances like Ubuntu not booting half the time because ZFS datasets won’t mount correctly.

VM

- 4 cores / 4 threads

- 8 GB RAM

- 120 GB zvol / qcow2, lz4, relatime

- virtio

- No page file, optimize for programs, DEP off

- Windows Server 2016

I’ll be using CrystalDiskMark (CDM) for benchmarking under Windows.
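
The test headings that follow (for example "none / threads") appear to pair a QEMU disk cache mode with an asynchronous I/O mode. The post does not say whether the VM was defined through libvirt or a raw QEMU command line, so the sketch below simply assumes the QEMU `-drive` syntax. The disk path is a placeholder, and `drive_arg` is a made-up helper name.

```shell
#!/bin/sh
# Build a QEMU -drive argument for one "cache / aio" combination.
# drive_arg is a hypothetical helper; the disk path is a placeholder.
drive_arg() {
    disk=$1
    format=$2
    cache=$3
    aio=$4
    # QEMU only accepts aio=native with a cache mode that bypasses the
    # host page cache, which means cache=none or cache=directsync.
    if [ "$aio" = native ] && [ "$cache" != none ] && [ "$cache" != directsync ]; then
        echo "aio=native needs cache=none or cache=directsync" >&2
        return 1
    fi
    printf 'file=%s,format=%s,if=virtio,cache=%s,aio=%s\n' \
        "$disk" "$format" "$cache" "$aio"
}

# A zvol is a raw block device, so format=raw; a qcow2 file would use
# format=qcow2 instead.
drive_arg /dev/zvol/local/vms/server2016 raw writethrough threads
```

The printed string would be passed to QEMU as `-drive <string>`. With libvirt, the same two settings correspond to the `cache` and `io` attributes of the disk's `<driver>` element.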

zvol

none / threads

Memory usage spiked up to 30.9 GB and crashed the host while doing the write test.
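
The post does not say how the memory figures were collected. One way to log host memory during a run, sketched here under the assumption of a Linux host, is to sample `/proc/meminfo`. ZFS on Linux reports its ARC as used memory rather than page cache, so the ARC size is printed separately when the kstat file exists. The function names are made up for this example.

```shell
#!/bin/sh
# Print used memory in KiB (MemTotal - MemAvailable) from
# /proc/meminfo-formatted text on stdin.
used_kib() {
    awk '/^MemTotal:/ {t=$2} /^MemAvailable:/ {a=$2} END {print t - a}'
}

# Print the current ARC size in bytes, or nothing if the ZFS kstat
# file is not present.
arc_bytes() {
    stats=/proc/spl/kstat/zfs/arcstats
    if [ -r "$stats" ]; then
        awk '$1 == "size" {print $3}' "$stats"
    fi
}

# Take one sample; wrap this in a loop with sleep to cover a whole
# benchmark run.
if [ -r /proc/meminfo ]; then
    echo "$(date +%T) used_kib=$(used_kib < /proc/meminfo) arc_bytes=$(arc_bytes)"
fi
```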

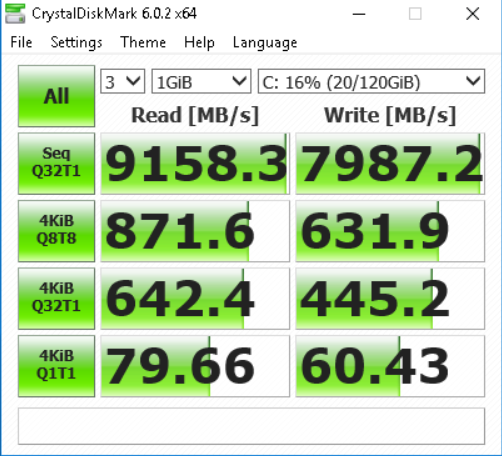

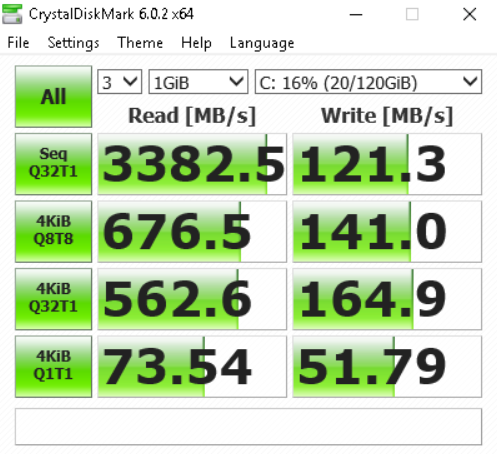

writeback / threads

Remote desktop died but the test continued. Memory usage kept spiking from 12.8 GB to 17 GB.

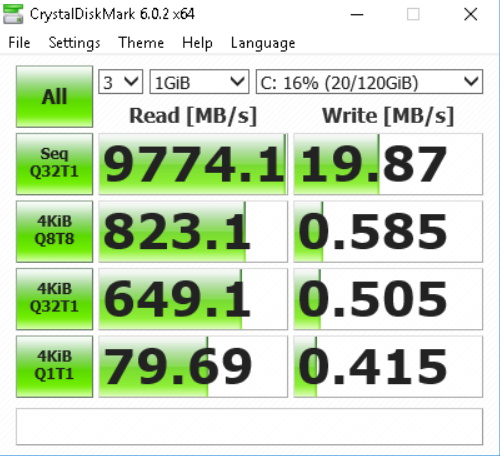

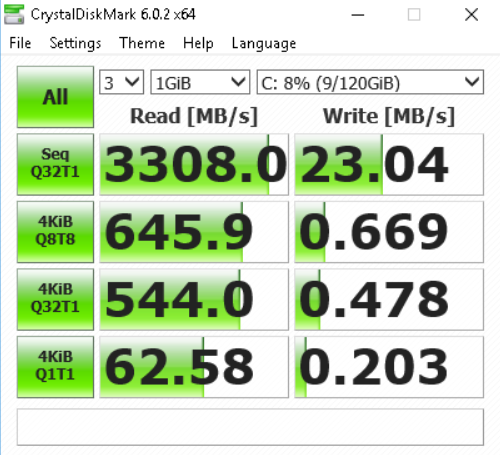

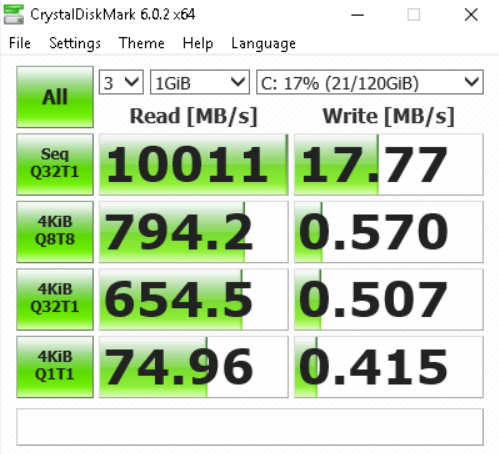

writethrough / threads

I can immediately tell that this is faster than writeback. The VM started quicker and loaded the server config tool faster. However, the write speeds are absolutely awful. Memory usage was a consistent 12.8 GB during the entire test.

This seems close to what an individual drive is capable of except the sequential writes should be a lot higher. Strange.

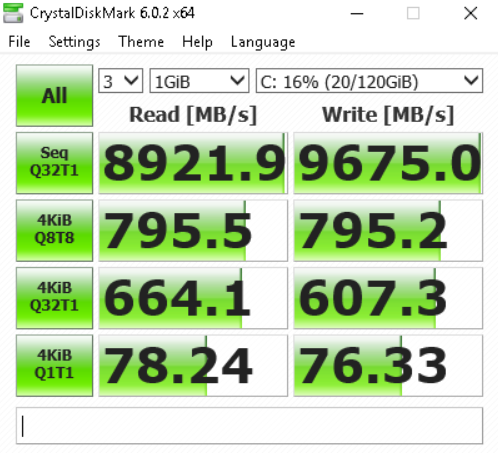

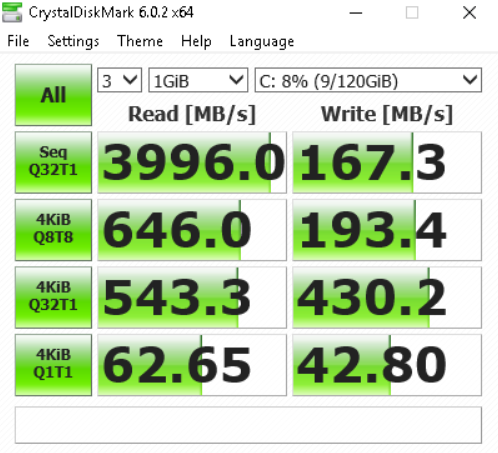

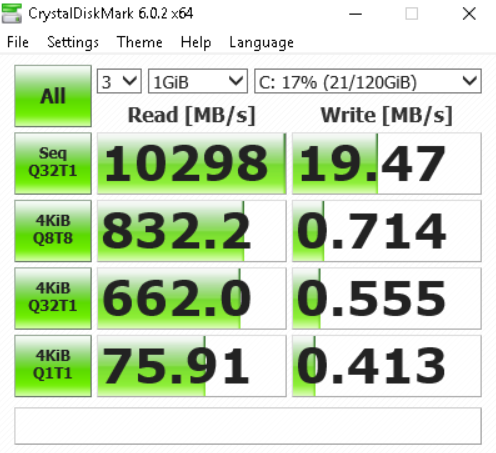

directsync / threads

Appears to be really fast just like writethrough. I remember testing this option before with mdadm RAID 10 under a Linux VM and it was extremely slow. With ZFS it appears to be different. Consistent 12.8 GB memory usage as well.

Overall it looks like the performance is slightly worse than writethrough.

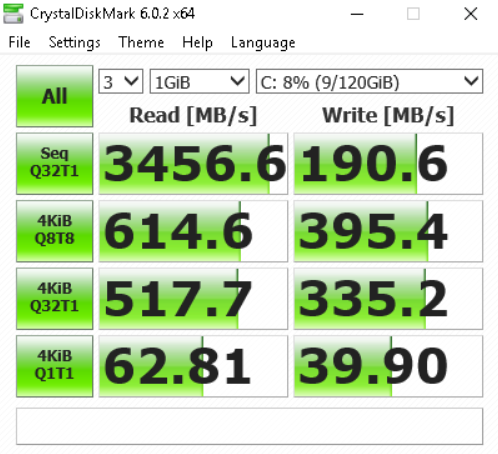

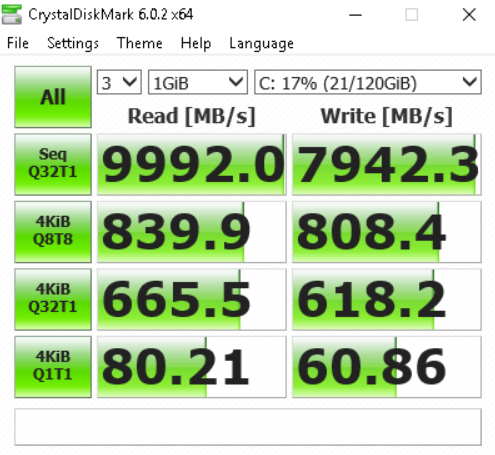

unsafe / threads

This is supposedly similar to writeback. However, the OS and programs loaded faster than writeback. Memory usage peaked around 14 GB which is lower than writeback.

https://www.suse.com/documentation/sles11/book_kvm/data/sect1_1_chapter_book_kvm.html

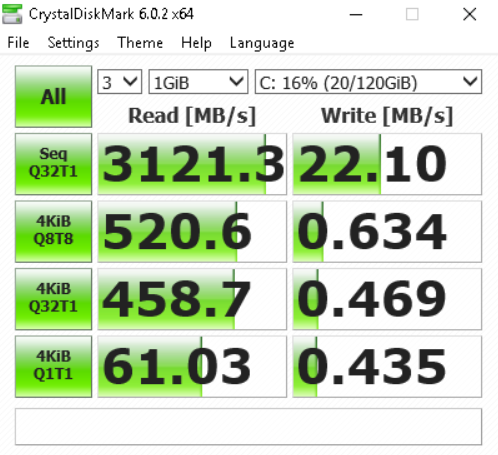

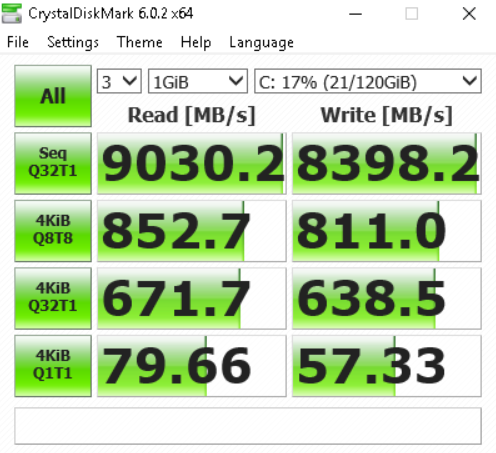

none / native

This is the option I use on a production server. I had severe performance issues while doing lots of random I/O in a Linux VM with “threads”.

Memory usage peaked at 30 GB. It slowly came down as it was flushed to disk. Sequential write speeds are that of a single SATA 3 hard drive. Even though it is higher than ‘writethrough’ from above, the test took longer to complete.

So far this option appears to be the best.

directsync / native

Took forever to boot. Not worth testing.

qcow2 on dataset

zfs set compression=lz4 local/vms

zfs set xattr=sa local/vms

zfs set recordsize=8K local/vms

qemu-img create -f qcow2 -o preallocation=full server2016.qcow2 120G

none / threads

Not supported.

writethrough / threads

Similar performance to the zvol.

writeback / threads

Reads are half those of the zvol with writeback. Writes are a little better than a standard hard drive. Memory usage dropped when the write test started. ZFS freeing the cache?

unsafe / threads

Reads are slower than writeback but sequential writes are slightly improved. Windows appears to be snappier using unsafe, whether it’s a zvol or a qcow2 image.

native

Not supported.
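
The post gives no reason for the two "Not supported" results in this section (none / threads and native). A likely one is that both modes need the image file opened with O_DIRECT, which the ZFS release shipped with Ubuntu 18.04 rejects on datasets. That is an assumption, and the sketch below is one way to check it for a given directory. `supports_o_direct` is a made-up name, and the probe relies on GNU dd's `oflag=direct`.

```shell
#!/bin/sh
# Report whether files in a directory can be written with O_DIRECT.
# supports_o_direct is a hypothetical helper for this example.
supports_o_direct() {
    dir=$1
    probe="$dir/.o_direct_probe.$$"
    # GNU dd opens the output file with O_DIRECT when oflag=direct is
    # given; the command fails on filesystems that reject the flag.
    if dd if=/dev/zero of="$probe" bs=4096 count=1 oflag=direct 2>/dev/null; then
        result=0
    else
        result=1
    fi
    rm -f "$probe"
    return $result
}

# Check the directory given as the first argument, or the current one.
if supports_o_direct "${1:-.}"; then
    echo "O_DIRECT works here"
else
    echo "O_DIRECT is rejected here"
fi
```

A failure can also mean the directory is not writable or that dd does not understand `oflag=direct`, so a negative result is only a hint.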

Schedulers

The tests above were conducted using the default cfq scheduler. I will only be testing zvols for this part.
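
On Linux the scheduler is a per-disk setting exposed in sysfs, with the active one shown in square brackets. A minimal sketch for checking it on each disk follows. It assumes the pool members show up as `sdX` devices, and `active_scheduler` is a made-up helper name.

```shell
#!/bin/sh
# Extract the active scheduler from the contents of
# /sys/block/<dev>/queue/scheduler, for example "noop deadline [cfq]".
active_scheduler() {
    printf '%s\n' "$1" | sed -n 's/.*\[\(.*\)\].*/\1/p'
}

# Show the active scheduler for every sdX disk found on this machine.
for f in /sys/block/sd*/queue/scheduler; do
    [ -r "$f" ] || continue
    dev=${f#/sys/block/}
    dev=${dev%%/*}
    echo "$dev: $(active_scheduler "$(cat "$f")")"
done

# Switching is a write to the same file as root, for example for a
# hypothetical disk sda:
#   echo deadline > /sys/block/sda/queue/scheduler
```

A change made this way applies to one disk and is lost on reboot, so it has to be repeated for every member of the pool.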

noop

This is usually the best scheduler for SSDs rather than spinning disks.

none / native

Ran out of memory. native with no cache does not appear to be safe with limited memory.

writethrough / threads

Sequential reads are a lot higher because the requests are not being re-ordered. Writes do suffer.

unsafe / threads

deadline

writethrough / threads

Marginally better than noop. In theory this scheduler should be better suited to VMs than CFQ when lots of requests are being made.

unsafe / threads

No real difference between noop and deadline for this.

bfq

I’m not sure if I got it working correctly. After I enabled the kernel option all of the other schedulers were removed and the VMs were very slow. I’ve used it on Arch before and never noticed anything weird.

Conclusion

unsafe is clearly the fastest and best option if you want speed without caring about data integrity. writeback is fast but it uses more memory.

qcow2 is slower than using a zvol but has some benefits, like thin provisioning and being able to specify an exact file name. With zvols, the device names sometimes change on reboot.
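
If the names that change are the numbered `/dev/zdN` nodes (an assumption; the post does not say), ZFS on Linux also creates symlinks under `/dev/zvol/<pool>/<volume>` that follow the volume name. The sketch below lists those links next to the node each one currently resolves to. `list_zvol_links` is a made-up helper name.

```shell
#!/bin/sh
# List each zvol symlink next to the device node it currently points
# at. list_zvol_links is a hypothetical helper for this example.
list_zvol_links() {
    root=${1:-/dev/zvol}
    # Nothing to list when no zvols exist on this machine.
    [ -d "$root" ] || return 0
    find "$root" -type l | sort | while read -r link; do
        printf '%s -> %s\n' "$link" "$(readlink -f "$link")"
    done
}

list_zvol_links
```

Pointing the VM at the `/dev/zvol/...` path instead of a `/dev/zdN` node should keep the disk definition valid even if the numbering shifts.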

writethrough is surprisingly snappy in Windows despite the write tests showing poor performance.

native offers good performance according to CDM; however, memory usage will peak and slow things down. This option also causes Windows and programs to load slower. Simple benchmarks do not tell the whole story. It may crash the host, so I would not recommend using it.

No matter what option you use, read performance is good with ZFS + KVM. It’s always the writes that are the problem.